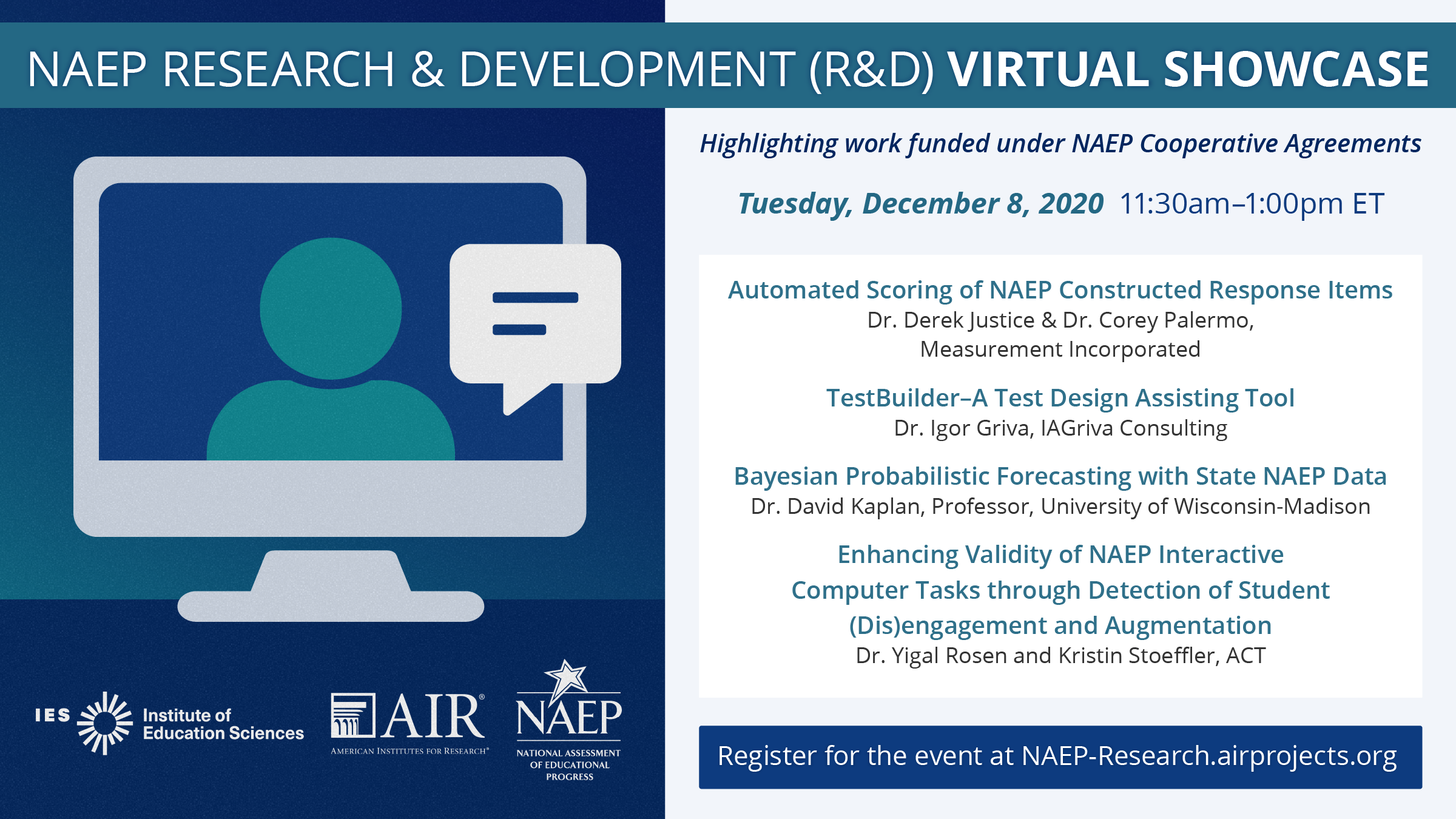

NAEP Research and Development (R&D) Virtual Showcase

On December 8, 2020, the NAEP Research and Development Program hosted a virtual showcase where awardees presented the work funded under the Cooperative Agreements Program.

About the Cooperative Agreements

The Cooperative Agreements Program is part of the NAEP Research and Development Program and is funded by the National Center for Education Statistics (NCES). This cooperative agreement opportunity is one avenue through which NAEP continues to pursue its goal of accurately measuring student progress and advancing the field of large-scale assessment. The goal of the NAEP Cooperative Agreements is to provide opportunities to address known challenges and bring new ideas and innovations to NAEP.

AIR, on behalf of NCES, solicited applications for funding this work, which was conducted collaboratively with NCES NAEP staff and their contractors. Funds under this program support development, research, and analysis to advance NAEP’s test administration; scoring; analysis and reporting; secondary data analysis; and product development.

Agenda

Each presentation included a brief Q&A with an expert panel. Click below to read more about each presentation, watch the webinar, and download the Q&A.